The Neuromarketing Challenge: First Response

It’s been more than a year since I posted the first Neuromarketing Challenge, and we’ve just now received our first response. The challenge, in case you missed that post, was for neuromarketing firms to submit a detailed case study or white paper that demonstrated a successful application of neuromarketing techniques. There’s very little published academic research on the topic (see Neuromarketing Proof? UCLA Brain Scans Predict Ad Success for one paper), and client data is usually closely guarded by commercial firms. We issued the challenge to try to bring some additional data into public view.

Innerscope Research sent the first response. It’s not fully responsive to the challenge, as the detailed numeric data isn’t there for us to pick apart, but it’s a step in the right direction. I’m going to post their response without editing. Feel free to chime in with questions and your thoughts on this in the comments.

From Innerscope Research:

—————————-

Innerscope Biometric Research Helps Mimoco Pre-Test Product Design Pipeline – Validation Case Study

There is a new breed of designer USB flash drives called MIMOBOTs that are a must-have for the gadget obsessed – an “it” accessory that is part pop art and part functional technology. These drives, made by Mimoco, are sold by a wide variety of retailers, from museum shops to high-end department stores, as well as online. They are priced at about $20 to $70 for eight to 64 gigabytes of memory, compared with $10 to $35 for generic versions.

As reported in The New York Times, Mimoco is the “Haagen Dazs” of designer flash drives. Innerscope Research partnered with Mimoco to study the emotional engagement of potential MIMOBOT users – tech-savvy, fashion-conscious, 20-somethings – as they reviewed a variety of designs. The product designs were split into two groups for passive evaluation while using Innerscope’s biometric technology:

(1) 30 designs that were already in-market with known sales performance

(2) 30 new designs that were being proposed for in-market use

If the emotional responses to (1) lined up with Mimoco’s sales data, the company would feel confident in using the responses to (2) to select which designs to move into production.

There were two explicit goals for the study: to test Innerscope’s ability to predict actual sales behavior, and to offer Mimoco specific insights into which design elements lead to higher sales. It was a win-win, particularly for Mimoco, which could use data on what its target customers found emotionally engaging on a nonconscious level to inform future design choices.

Part I: Predicting Online Sales Behavior

Out of their 300+ in-market designs at the time of the study, Mimoco provided a sampling of both best sellers and poor performers, including pop culture MIMOBOT character designs from the “Hello Kitty” and “Star Wars” brands. They did not share the sales data with any of us prior to fielding the study. It was truly blind validation research on a series of designs that represented over 100,000 unique purchases.

Data was collected using our Biometric Monitoring System™, which involves a medical grade biometric belt that measures moment-by-moment changes in heart rate, respiration, skin sweat, and motion in combination with eye tracking to gauge visual attention and cognitive processing. The individual responses were combined across the audience to determine the emotional response for each MIMOBOT design.

The correlation between the emotional response of 60 potential MIMOBOT users and Mimoco’s historical sales for the sample designs was 0.73 (a perfect correlation would be 1). This is a strong statistical indicator of the connection between designs that evoke high emotional response and those that achieve higher sales.

In fact, the designs we found to be most engaging actually ranked #1, #3, #4, and #5 in sales within the dataset.

“I was amazed when I received the initial findings from Innerscope because the research correctly identified four of our five best-selling designs from the sample set,” said Dwight Schultheis, Mimoco Vice President. “These designs outsold the less engaging designs 3:1 in the marketplace. From that moment on, I was confident that Innerscope had the ability to truly measure our customers’ emotional engagement with our product.”
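For readers who want to see what a figure like the 0.73 correlation involves, here is a minimal sketch of a Pearson correlation between per-design engagement scores and sales. The case study does not say which correlation statistic was used or how the biometric signals were combined into one score, so Pearson's r, the function name `pearson_r`, and the sample values below are all assumptions made for illustration; none of the numbers come from the study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-deviations and sums of squared deviations from the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical placeholder values, NOT data from the Mimoco study:
# one engagement score and one sales figure per design.
engagement_scores = [0.2, 0.5, 0.4, 0.9, 0.7, 0.3]
units_sold = [120, 300, 150, 800, 650, 400]

r = pearson_r(engagement_scores, units_sold)
print(f"illustrative r = {r:.2f}, r^2 = {r * r:.2f}")

# The one figure the case study does report:
reported_r = 0.73
print(f"reported r = {reported_r}, r^2 = {reported_r ** 2:.2f}")
```

Squaring the reported value gives an r² of about 0.53. If the 0.73 is a Pearson coefficient, a linear relationship with the engagement measure would account for roughly half of the variation in sales across the sample designs.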

Part II: Identifying Design Elements that Work

Once Mimoco was convinced of the connection between emotional response and actual sales, we turned our attention to identifying design themes that emerged among the highest performers. This evaluation took into account all of the designs studied – both in-market and concepts under review.

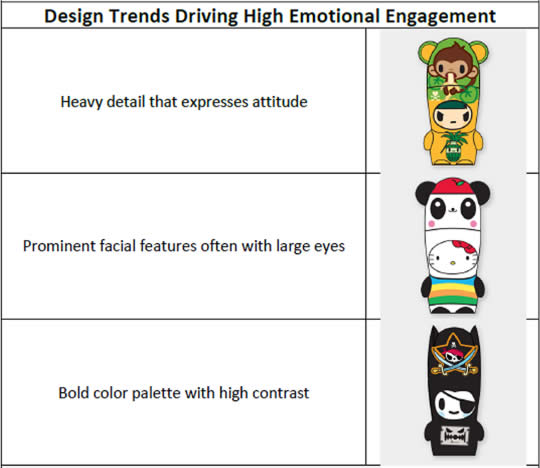

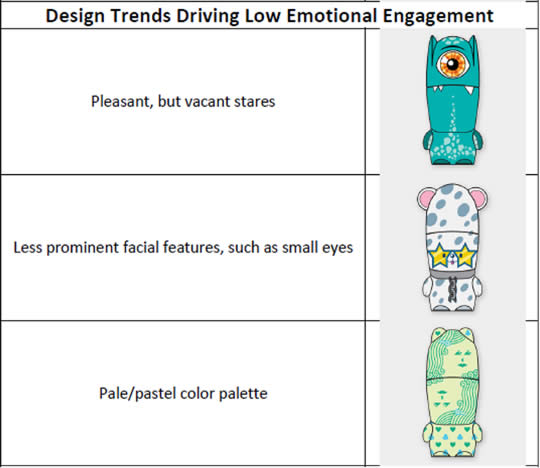

Across the board, we saw common elements in the high performers: prominent features, often with large eyes, and a bold color palette. The most engaging designs also tended to “express an attitude.” Interestingly, the opposite traits (small eyes, pale colors, vacant stares) were common among the designs that generated low emotional engagement. Here is a chart of what this looks like:

“The findings from Innerscope have had a material impact on Mimoco’s business,” said Schultheis. “Our ‘hit rate’ of producing ‘good’ and ‘great’ selling MIMOBOT designs has improved as a result of Innerscope’s design recommendations (namely, prominent eyes, bold colors and contrast, and design detail).”

Part III: Predicting Online Ad Response

We then applied these findings to an advertising scenario to explore what they could mean for predicting future behavior. We created two versions of the same MIMOBOT online display ad and ran them on MySpace for roughly 200,000 exposures.

Version 1 used two of the most engaging MIMOBOT designs:

Version 2 featured a couple of the less engaging designs:

The ad featuring the most engaging MIMOBOTs drew twice as many clicks as the ad with the less engaging designs. This was a strong indicator that designs with high engagement lead to stronger ads.
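One way to check whether a 2x difference in clicks is more than noise is a two-proportion z-test on the click-through rates. The sketch below is only an illustration: the case study gives neither the click counts nor how the roughly 200,000 exposures were split between the two ads, so the even split and the click numbers are hypothetical, as is the function name `two_proportion_z`.

```python
import math


def two_proportion_z(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the difference in click-through rates."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both ads perform the same
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        raise ValueError("test is undefined when the pooled rate is 0 or 1")
    z = (rate_a - rate_b) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, NOT from the study: an even split of exposures,
# with one ad drawing twice as many clicks as the other.
z, p = two_proportion_z(clicks_a=100, views_a=100_000,
                        clicks_b=50, views_b=100_000)
print(f"z = {z:.2f}, p = {p:.5f}")
```

With click counts this small relative to exposures, the result depends heavily on the actual numbers, which is one reason the raw counts would be worth publishing alongside the 2x figure.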

In summary, our emotional response metric had a strong correlation to Mimoco’s sales data with the highly engaging designs clearly outselling the less engaging ones. We were also able to predict online advertising behavior – the emotionally engaging designs drove twice as much click-through behavior.

“In a consumer products business like Mimoco’s, the most basic criteria for success are that consumers love our product,” said Schultheis. “By identifying key design attributes of our MIMOBOT flash drive product, Innerscope handed us a recipe for success. We have more best-selling designs than ever before (and much less expensive and slow-selling inventory).”

We are confident that this case study offers direct evidence for the validity of using biometrics to measure unconscious emotional engagement, and for the long-term value this research approach offers to brands.

——-End of Innerscope-provided content—–

If you can get past the occasional burst of press-release-style prose, what do you think? Does this make the case for biometric measurements as a predictor of consumer preference?