Here’s Why Smart Marketers Use A/B Testing

How often are websites designed using “best practices” or by trusting the experience of a seasoned expert? The answer is, “all too frequently.”

In every speech I give, I offer practical advice on how to get better marketing results by using brain and behavior science. But I also remind audiences to test rather than blindly trust my advice, or the advice they get from books, articles, experts, and other sources. A/B testing is simple, and it costs far less than missed conversions.

WhichTestWon (a site you should subscribe to!) just described the results of an experiment that shows why A/B testing is so important.

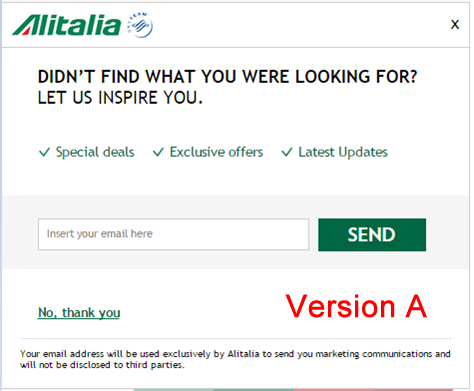

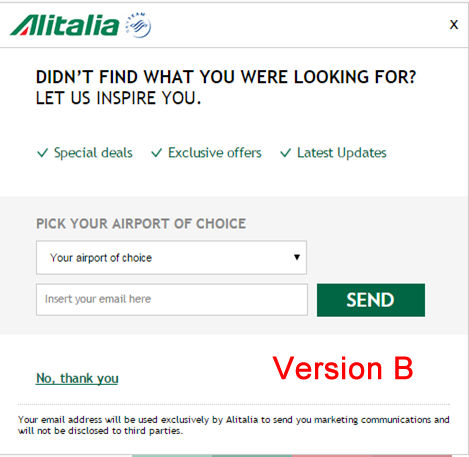

Their post describes an email-collection popup test for an airline site which ran in two markets, Italy and the UK.

The variation was simple – one version, “A,” asked for just the email, and the other, version “B,” asked for the email and also included a drop-down selector for the visitor’s preferred airport of origin.

Conventional wisdom says that every form field you add reduces the conversion rate. So, unless you are using additional form fields to filter out unmotivated visitors, one field is usually better than two.

Here’s what the tests revealed, according to WhichTestWon:

In Italy, version B won by a landslide, increasing conversions by 80.9%. These results are based on over 650,000 unique visitors, with a confidence rate of 99.9%.

In the UK, however, version B was the clear loser. It created only a 0.63% lift, 81% less than Version A, the non-personalized email-only version.
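If you want to sanity-check figures like these on your own tests, here is a minimal Python sketch of how a lift and a confidence level can be computed. It assumes a two-proportion z-test, which is a common choice but not necessarily the method WhichTestWon or Webtrends used. The visitor and signup counts are invented for illustration; they are not the airline's data.

```python
import math


def lift(rate_a, rate_b):
    """Relative change in conversion rate of B over A (0.2 means +20%)."""
    return (rate_b - rate_a) / rate_a


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally well.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, chosen only to illustrate the arithmetic.
visitors_a, signups_a = 100_000, 1_000   # version A: email only
visitors_b, signups_b = 100_000, 1_200   # version B: email + airport

observed_lift = lift(signups_a / visitors_a, signups_b / visitors_b)
z_score, p_value = two_proportion_z_test(signups_a, visitors_a, signups_b, visitors_b)

print(f"lift: {observed_lift:.1%}")
print(f"z: {z_score:.2f}, p-value: {p_value:.6f}")
```

A p-value below 0.001 is roughly what testing tools mean when they report "99.9% confidence." Run the same calculation separately for each market; as these results show, a winner in one audience can lose in another.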

The first surprise is that in Italy, the additional form field improved conversion in a dramatic fashion. So much for minimizing fields as a universal best practice.

The second surprise is that the UK market behaved nothing like the Italian market. If you saw results from just one of these markets, you probably wouldn’t guess that they would fail to carry over to the other.

The WhichTestWon folks took a stab at explaining the results:

It’s likely that cultural differences between audiences affected behavior.

The team from Webtrends hypothesized that customer care is more highly valued in Italy. Italian users may have, therefore, viewed the additional form field as a positive attempt to create a more personalized web experience.

In contrast, UK visitors may have seen filling in the extra field as double the amount of work.

I am always very leery of after-the-fact explanations of human behavior, but that reasoning is as plausible as any other conclusion.

To me, the big takeaways from these tests have nothing to do with form fields. Here’s what I’d emphasize:

- Never rely on best practices as a substitute for testing.

- Never assume that even very strong test results will translate directly to similar, but not identical, situations.

Happy testing!